Documented

A web-based application that helps makers associate their documentation with the objects they 3D print. Data is associated with the model using tags that can be embedded in the print and read through a webcam.


May 2019 - April 2020

Figma, Illustrator, JavaScript, P5, Google Teachable Machine, Prusa 3D Printers

Lead Product Designer - from concept to delivery

Conducting interviews, paper and digital wireframing, low and high-fidelity prototyping, conducting usability studies, accounting for accessibility, and iterating on designs.

Maker culture is empowering an increasingly diverse audience to create artifacts. People from different backgrounds and with varying levels of technical skill are building a wide range of projects, from woodworking to smart interactive devices that use electronics. Makers commonly share their projects, and the lessons they learned along the way, with the broader community through online maker platforms such as Etsy, Instructables, and Craftster. Documentation typically facilitates this sharing of knowledge and can include images, videos, and text describing the procedures the maker followed to create the final artifact. These documents also capture what was built, how, and why, which gives clear insight into the project.

End-users (i.e., the makers themselves or people interested in rebuilding or learning about the project) use the documentation to reflect on this information and form their own understanding of the object and how it was built. Reflection is a cognitive process with a purpose, an outcome, or both, and is applied in situations where the material or object being reflected upon is new. Engaging in such reflective processes can be challenging with physical objects because the artifact and its documentation typically exist as two separate entities. When users look at the physical object, they may be unable to learn how it was built or why it was designed the way it was. As a result, they typically have to study the object and its documentation separately and form the connections on their own. In this thesis, we ask whether embedding the documentation into the object being made promotes self-reflection, and whether this facilitates a deeper understanding of the object and its design process.

Designing a web-based application that creates a new form of interaction with documentation of physical objects.

Documented is a web-based application that helps users create interactive documentation, triggered by 3D printed tags embedded in 3D models of the object. The application guides users through a new pipeline that begins with organizing the documentation material and ends with documentation that is ready to be viewed and shared through a 3D model of the object.

The application consists of three stages: encoding data, embedding tags, and retrieving data.

1. Encoding Data

In this stage, users are provided with tags with which documentation data can be associated. The tags are designed to be both human-readable and machine-detectable. Users can connect text and up to four files to each tag; these can be image, audio, video, or design files. From a computational standpoint, there is no limit on how many tags or files could be created. In the current implementation, however, each tag is limited to four files, which allows users to attach one file of each type to a tag while leaving them free to be creative in how they organize their documentation data.
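To make the encoding stage more concrete, here is a minimal TypeScript sketch of how a tag and its attachments might be modeled. Only the four-file limit and the four file kinds come from the description above; the type names, the `attachFile` helper, and the `MAX_FILES_PER_TAG` constant are hypothetical and are not taken from Documented's actual P5 code.

```typescript
// Hypothetical data model for the encoding stage. Names are illustrative,
// not taken from Documented's source code.
type FileKind = "image" | "audio" | "video" | "design";

interface AttachedFile {
  name: string;
  kind: FileKind;
}

interface DocumentationTag {
  id: string;           // label the tag will later be recognized by (assumed)
  text: string;         // free-text notes attached to the tag
  files: AttachedFile[];
}

// Per-tag file limit described in the text. Kinds are deliberately not
// enforced (e.g. two images are allowed), so users can organize freely.
const MAX_FILES_PER_TAG = 4;

// Returns a copy of the tag with the file appended.
// Throws if the tag already holds the maximum number of files.
function attachFile(tag: DocumentationTag, file: AttachedFile): DocumentationTag {
  if (tag.files.length >= MAX_FILES_PER_TAG) {
    throw new Error(`Tag "${tag.id}" already has ${MAX_FILES_PER_TAG} files`);
  }
  return { ...tag, files: [...tag.files, file] };
}

// Example: a tag documenting a base part, with one design file attached.
const baseTag: DocumentationTag = { id: "star", text: "Base assembly notes", files: [] };
const updated = attachFile(baseTag, { name: "base.stl", kind: "design" });
console.log(updated.files.length); // prints: 1
```

Returning a new tag object rather than mutating the original keeps each edit easy to undo in a UI, but that is a design choice of this sketch, not something the project description specifies.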

2. Embedding Tags

After completing the first stage, users are navigated to a 3D modelling sketch in Tinkercad, an easy-to-use 3D CAD design tool. You can download the model here. Users are asked to position the provided 3D models of the four tags onto the 3D model of the object they are documenting by simply dragging and dropping the tags onto the model. The model can then be printed with the embedded tags using any 3D printing technique.

3. Retrieving Data

Once the 3D model of the object is embedded with tags, users can scan the tags with a webcam; when a tag is detected, all the information associated with it is displayed on the screen for users to navigate through. This research was published at the 2021 CHI Conference on Human Factors in Computing Systems. You can find the paper here.

I employed a Human-Centered Design (HCD) methodology. It helped me gain insight from the maker community, which is highly active in problem-solving and solution-building. It also let me draw on the maker community's wide range of backgrounds, skills, and knowledge to identify design opportunities and evaluate the built prototype.

1. Inspiration

I divided the inspiration phase into two studies. My goal at this stage was to understand makers' documentation practices, the types of data they capture during the building process, and the different formats used to visualize that data. To do this, I first conducted an artifact analysis of common documentation formats in maker communities. I then held interviews and brainstorming sessions with professional and hobbyist makers to consolidate the findings and generate ideas for a solution.

Artifact Analysis - I chose artifact analysis because only by examining how people use and conceptualize documentation could we identify the design goals for the new interaction.

I selected four highly viewed examples of documentation, on the assumption that they were more likely to be of relatively high quality and would therefore be good sources for the analysis. From Instructables, I selected one of Becky Stern's projects, in which she builds an RFID ring using metalworking techniques and a small RFID tag. From the Make:Projects website, I selected Clarissa Kleveno's project, in which she makes an LED ring that lights up when it is correctly aligned with an electromagnetic field. From Build in Progress, I selected Tiffany Tseng's Spin Turntable, in which she builds a turntable that connects to a mobile phone application and lets makers create GIFs of the objects they have made. Lastly, from YouTube, I selected Adam Savage's project in which he makes a novel, over-engineered bottle opener in collaboration with Laura Kampf, a fellow YouTuber and maker.

I selected 30 questions for analyzing the strengths and limitations of the varied documentation formats, types of media used for sharing information, and ability to access information by an expert or non-expert. Through the analysis, I found that:

Interview and Brainstorming Sessions - I chose to conduct semi-structured interviews with makers because they would give me a better understanding of their documentation practices and, by bringing makers into the project, let me draw on their expertise and experience to ideate a solution.

I held interviews and brainstorming sessions with five professional and hobbyist makers. My goal was to better understand the makers' documentation practices and identify what they saw as the best way to connect documentation-related information to the physical object being built. Four of the five participants said that attaching documentation data to the physical object would be highly beneficial to them as makers and would help them better understand the documentation.

From these data, I set three design goals for the project:

2. Ideation

I developed the final prototype by first building smaller prototypes, each designed to test a specific parameter that I had identified as a potential way to enhance interaction with the documentation. I created multiple iterations and tested them thoroughly to understand the limitations of the technologies at hand. My goal was to create a pipeline that begins with creating the documentation and ends with the documentation ready to be viewed and shared through a 3D model of the object.

I created the application using P5, a JavaScript library. P5 allowed me to quickly build a simple user interface (UI) through which users could upload their files to each tag. I chose 3D printing to embed the tags onto 3D models of the objects. To make sure the tags could be printed on the common printers accessible to most makers, I used a Prusa i3 MK3S printer to test which types of tags were feasible. I was looking for tags that would be both human-readable and machine-detectable: users should understand what data to expect on an object just by looking at its 3D model, while the built prototype should be able to scan the tags easily and retrieve the information attached to them.

I explored three types of tags. In the first series, I added simple shapes embedded on top of the 3D model of the object. In the second series, I added simple shapes as engravings on the 3D models. Finally, in the third series, I added patterns onto the 3D models. Given the printer's limitations and level of accuracy, I found that the first series, simple shapes embedded on top of the model, worked best.

The next step was to attach the selected tags onto the 3D models of the objects. The system needed to be user-friendly so that all makers, including those with limited knowledge of the technology, could move the tags around the object and place them where they wanted. Tinkercad provided a user-friendly way to model the object. Users are navigated to a premade Tinkercad sketch that includes an example 3D model and several tag models. Users can also import 3D models of other objects and attach the tags to them.

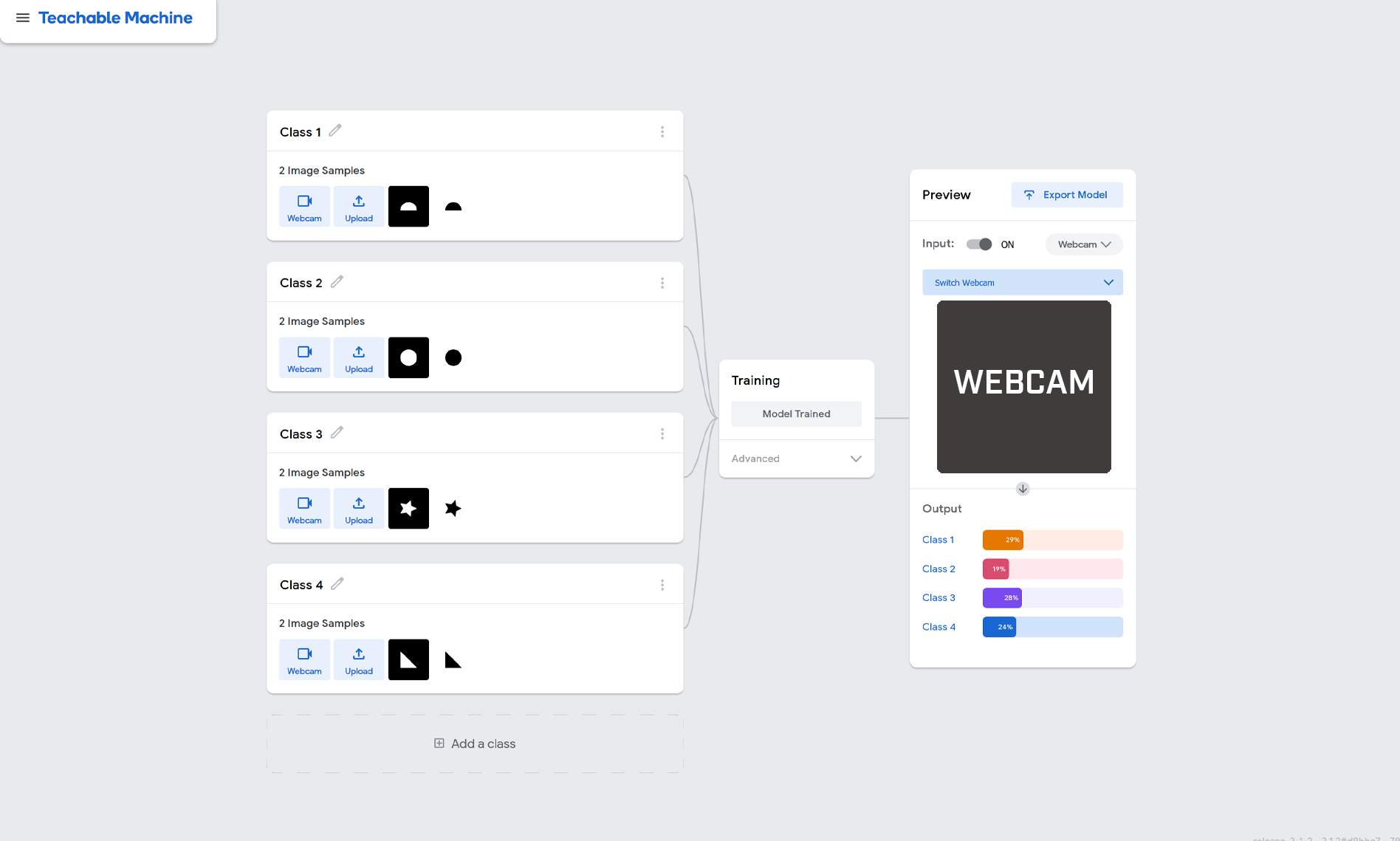

The final step was to detect the embedded tags and display the information associated with them. I decided to use Teachable Machine, software developed by Google that lets people create machine learning models for their websites. People teach the model to recognize things by uploading example images, sounds, or poses. I then used ML5, another JavaScript library focused on machine learning, to run the trained model on a webcam feed so that the system could detect the tags embedded in the printed model. The system estimates the probability of each class defined in Teachable Machine and matches the tag to the class with the highest confidence.
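The matching step described above, picking the class with the highest confidence and looking up the documentation linked to it, can be sketched in a few lines of TypeScript. This is an illustration under assumptions, not Documented's code: it does not call ML5 or Teachable Machine, and the `Prediction` interface, `bestMatch`, and `retrieveDocumentation` are hypothetical names. The label-plus-confidence shape is assumed to be roughly what an ML5 classifier reports for each frame.

```typescript
// Hypothetical shape of one classifier prediction: a class label plus a
// confidence in [0, 1]. Treat this interface as an assumption.
interface Prediction {
  label: string;
  confidence: number;
}

// Returns the prediction with the highest confidence, or undefined if
// the classifier returned nothing.
function bestMatch(predictions: Prediction[]): Prediction | undefined {
  let best: Prediction | undefined;
  for (const p of predictions) {
    if (best === undefined || p.confidence > best.confidence) {
      best = p;
    }
  }
  return best;
}

// Looks up the documentation linked to the best-matching tag label.
// Returns undefined if nothing was predicted or the label has no entry.
function retrieveDocumentation(
  predictions: Prediction[],
  docsByLabel: Map<string, string>,
): string | undefined {
  const best = bestMatch(predictions);
  return best === undefined ? undefined : docsByLabel.get(best.label);
}

// Example with made-up confidences for three tag classes.
const frame: Prediction[] = [
  { label: "star", confidence: 0.12 },
  { label: "circle", confidence: 0.81 },
  { label: "square", confidence: 0.07 },
];
const docs = new Map<string, string>([["circle", "Hinge design notes and print settings"]]);
console.log(retrieveDocumentation(frame, docs)); // prints: Hinge design notes and print settings
```

The project description does not mention a minimum-confidence threshold, so none is applied here; a deployed system might want one so that a frame with no tag in view does not trigger the closest-looking class.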

3. Implementation

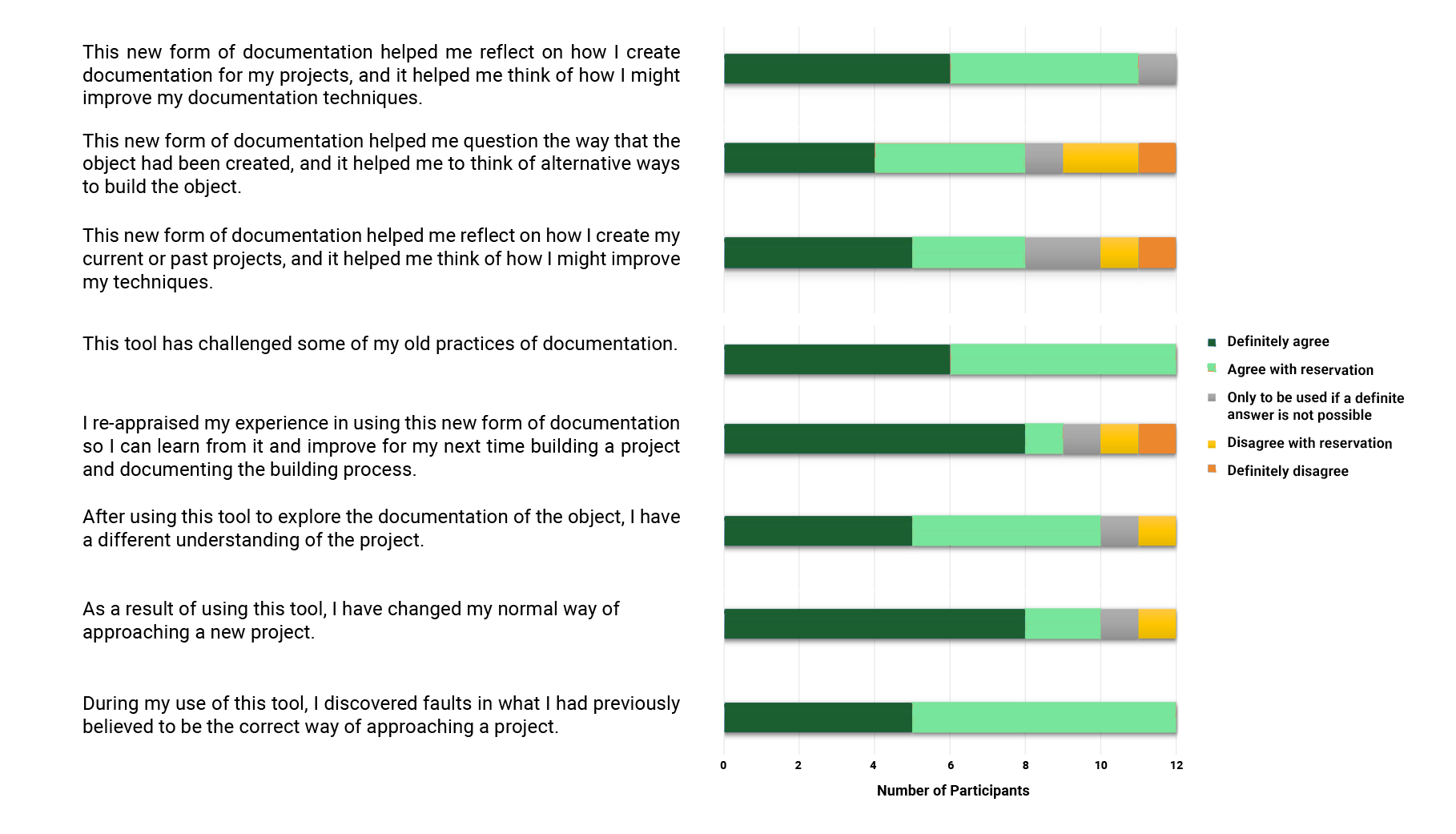

To test the built prototype, I ran an evaluation study with 12 professional and hobbyist makers. My goal was to evaluate the functionality of the proposed solution and measure how successful it was in promoting more reflective learning from the project documentation. I also used a survey to measure how much makers reflected on the object itself and its fabrication process.

Participants described the interaction as “fun”, “easy”, and “less intimidating”. The survey found that all 12 participants felt the experience gave them a deeper understanding of both the object and the fabrication techniques used to build it. The system provided them with information about the inspiration, motivation, and goal behind the project. Furthermore, the direct link between the object and its documentation allowed them to look directly for the specific fabrication techniques they were interested in and to reflect on those techniques.

Overall, I found that associating documentation information with physical tags that get embedded in the printed object helps people reflect on three aspects of the process and the object:

More information about the findings of the research can be found in the publication.

As a first step, this research explores how people might embed documentation information into physical objects. In the near future, I am interested in exploring how people might use such documentation styles to support knowledge creation, including developing new skills, building new theories, and evaluating design decisions. After addressing some of the current implementation's limitations (e.g., support for collecting documentation data, multiple-tag detection, and testing other tag styles), I would like to examine the prototype in varied contexts. For example, I am interested in how students might use it in educational settings to develop and transfer knowledge, and how users of mass-manufactured objects might perceive such documentation at home, for instance through embedded instructions for assembling and disassembling IKEA furniture.

Due to time constraints, I had to limit the number of makers I interviewed during the inspiration phase. More interviews would have given me a better understanding of the challenges and difficulties makers face when documenting their projects.

For the evaluation study, all participants were design students and therefore not a representative sample of makers. Although their practices varied widely, they came from a narrow field of makers with shared background experiences, which narrowed the range of feedback I received from the evaluation study.

Most of the research relied on qualitative techniques, and reflection is especially difficult to measure with them. In future projects, I would use quantitative techniques to measure users' reflections, which would give a more precise basis for comparing the designed prototype with current common documentation formats.

Making, and documenting what we make, is something people have always done. Although we no longer have to build things to meet our primary needs, many people still find that they learn a lot through making, and documentation is one of the main ways of promoting learning from that experience. Researchers and interaction designers should therefore consider how documentation tools can enhance users' understanding of projects and foster a more reflective experience.

In this work, I envision a novel addition to the documentation styles currently found on maker platforms (e.g., Instructables). For instance, the next time you decide to build an object, you could first 3D print a model of it to explore its documentation and reflect on the object before building it. Imagine a bookshelf where, instead of books, you keep 3D models of projects you previously built, projects that inspired you, or projects you learned something from. When you begin your next project, you can walk up to the shelf, look through the models, and easily access the documentation and the design decisions that went into building each object.

We cannot ignore the relevance of this work during the current global public health crisis. The COVID-19 pandemic has had large-scale effects on both local and global education systems, which underlines the need for new systems that can support learning in the digital world. The consequences of the pandemic, such as social isolation and massive shifts in the dynamics and makeup of the workforce, are changing the current zeitgeist and the public's expectations. People have started reflecting on what information and skills are essential for them to know or learn and what they can live without. I believe we will see the line between formal and informal learning shift, along with a substantial move toward the maker's mindset, which will require new hybrid systems that promote those learning styles.

I hope that the work presented here will inspire researchers and interaction designers to focus on the importance of documentation in learning and to develop tools that use these technologies to enhance end-users' reflections on the making process.

This research was also published at OCAD University. You can find the full thesis paper here.

This project was supported by NSERC RGPIN-2018-05950. Special thanks to our participants for their valuable time and efforts.